Demand flexibility among data centers could reduce the need for new gas-fired generation to meet their energy demand, while driving development of additional renewables and cutting electricity prices, according to a report by Duke University’s Nicholas Institute for Energy, Environment and Sustainability.

In 2025, the institute released a major paper showing that small amounts of data center flexibility could unlock 100 GW of grid capacity to serve more large loads. (See US Grid Has Flexible ‘Headroom’ for Data Center Demand Growth.)

The new paper — “Data Centers and Generation Capacity over the Next Decade: Potential Benefits of Flexibility” — seeks to expand on that theme by looking at how data center flexibility could change the power system, co-author Martin Ross, a senior research economist, said.

“What I normally do is the longer-term capacity planning modeling around policy analysis,” Ross said in an interview. “And I was curious, in that longer-term context, how would flexibility sort of alter the capacity mix going forward.”

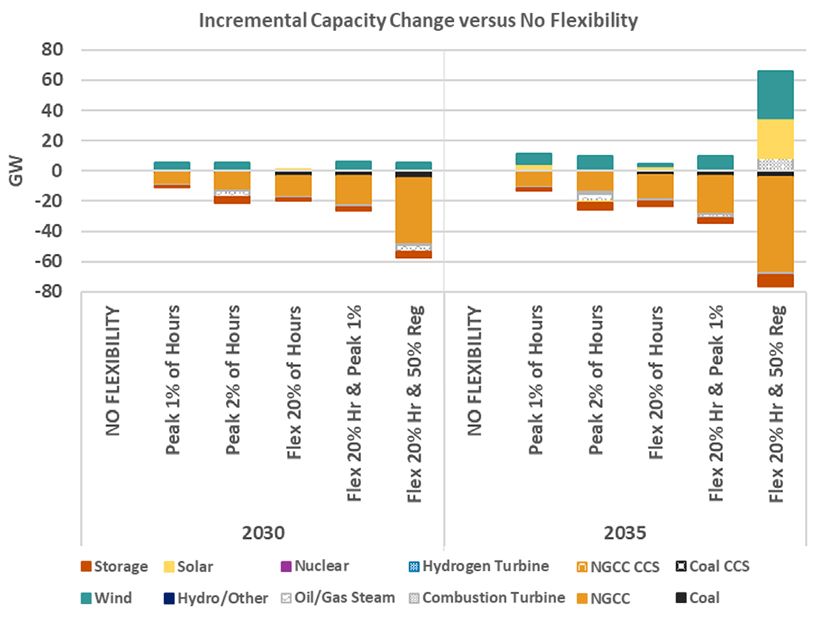

Investments in new combined cycle gas-fired plants fall by 10 to 50% in the flexibility scenarios modeled, the report finds. The low end of that range assumes data centers avoid consumption during just 1% of net peak hours.

The report studies both temporal flexibility (curtailing load during peak hours) and spatial flexibility (shifting compute load to data centers in other regions), and finds that using the two together further reduces the need for new gas plants.

The flexibility scenarios include cases in which data centers curtail their entire load during 1% or 2% of net peak hours, and others in which they curtail 20% or 50% of their load during those hours. Combination scenarios model 20% and 50% demand flexibility with the option, but not the requirement, to avoid consumption entirely during 1% or 2% of net peak hours. A final scenario uses spatial flexibility to shift 50% of the data center demand in a state to other regions.
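To make the temporal scenarios concrete, here is a minimal Python sketch of a curtailment scenario applied to an hourly load profile. It is not the Nicholas Institute's model: the function names `curtail_peak_hours` and `system_peak`, the toy load data, and the choice to rank hours by system net load excluding the data center are all hypothetical assumptions. Over a full year of 8,760 hours, 1% of net peak hours comes to about 88 hours and 2% to about 175.

```python
def curtail_peak_hours(net_load, dc_load, peak_share, curtail_frac):
    """Curtail data center load during the highest net-load hours.

    Hypothetical helper, not taken from the report.
    net_load     -- hourly system net load excluding the data center, MW
                    (assumed here to mean demand minus wind and solar output)
    dc_load      -- hourly data center load, MW (same length as net_load)
    peak_share   -- fraction of hours treated as net peak hours, e.g. 0.01
    curtail_frac -- share of data center load shed in those hours,
                    e.g. 1.0 (full curtailment), 0.5 or 0.2
    Returns (curtailed data center load, list of curtailed hour indices).
    """
    if len(net_load) != len(dc_load):
        raise ValueError("net_load and dc_load must have the same length")
    # Number of peak hours, rounded to the nearest whole hour (at least one).
    n_peak = max(1, round(len(net_load) * peak_share))
    # Indices of the n_peak hours with the highest system net load.
    ranked = sorted(range(len(net_load)), key=lambda h: net_load[h], reverse=True)
    peak_hours = ranked[:n_peak]
    flexed = list(dc_load)
    for h in peak_hours:
        flexed[h] = dc_load[h] * (1.0 - curtail_frac)
    return flexed, peak_hours


def system_peak(net_load, dc_load):
    """Highest hourly combined load (net load plus data center load), MW."""
    return max(n + d for n, d in zip(net_load, dc_load))


if __name__ == "__main__":
    # Toy 100-hour profile: net load rises steadily from 0 to 99 MW,
    # and a single data center draws a flat 50 MW.
    net = [float(h) for h in range(100)]
    dc = [50.0] * 100
    for frac in (1.0, 0.5, 0.2):
        flexed, hours = curtail_peak_hours(net, dc, peak_share=0.02, curtail_frac=frac)
        print(frac, sorted(hours), system_peak(net, dc), system_peak(net, flexed))
```

In this toy case, curtailing the two highest hours lowers the combined peak from 149 MW to 147 MW whatever the curtailment fraction, because the next uncurtailed hour then sets the peak. A capacity-planning model of the kind Ross describes goes further, choosing which new plants to build in response to the flexed load rather than simply clipping peaks.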

Ross focused on combined cycle plants rather than combustion turbines because the former’s higher capacity factors are better suited to steady data center loads.

“Normally you would think if you were reducing stress on the grid, you would first be reducing the need for peaking units,” he said. “But given sort of the overall demand growth that’s being expected in the system, that leads you a bit more towards the combined cycle units and makes the gas peakers a bit less useful, since it’s not efficient to run them all the time as a base load for the data centers.”

Projected savings in capital investment, operating expenses and fuel costs over the next decade range from $40 billion to $150 billion. Data center flexibility could lower average electricity prices by $2.50 to $8/MWh, cutting retail customers’ bills by 0.5 to 2.8%.

The report generally found that greater demand response translated into a need for fewer gas plants, as the more variable demand better matched renewable output.

“Combining the 20% hourly flexibility case with the ability to shift up to 50% of each region’s initial data center demands into other regions allows the national grid to avoid up to two-thirds of the new [combined cycle natural gas] units that would have been built by 2035 as data centers move to regions with both less stress on the grid and higher renewable resources,” the report said.

While flexibility offers clear benefits to the grid, it is unclear how much of it data centers can offer, Ross said. Customer-facing demand for cloud services is generally inflexible, though the compute load for training AI models can be shifted around.

“When I think of flexibility, I normally think of flexibility driven by cost savings as the motivation for the flexibility, and I’m not sure that is a major factor in the thinking right at the moment, versus a race to beat all the other models,” Ross said.

Still, if data centers are motivated to offer flexibility for other reasons, such as faster speed to market, that flexibility would benefit all customers.

“I was surprised that the flexibility was having fairly significant effects on what data centers might end up paying because you’re avoiding those high-cost hours, and that sort of not only benefits the data centers, but benefits consumers more broadly,” Ross said.