AUSTIN, Texas — Having finally added real-time co-optimization (RTC) to its market, completing an effort that began in 2019 and bringing it in line with every other U.S. grid operator, ERCOT can turn its attention to other pressing issues in 2026.

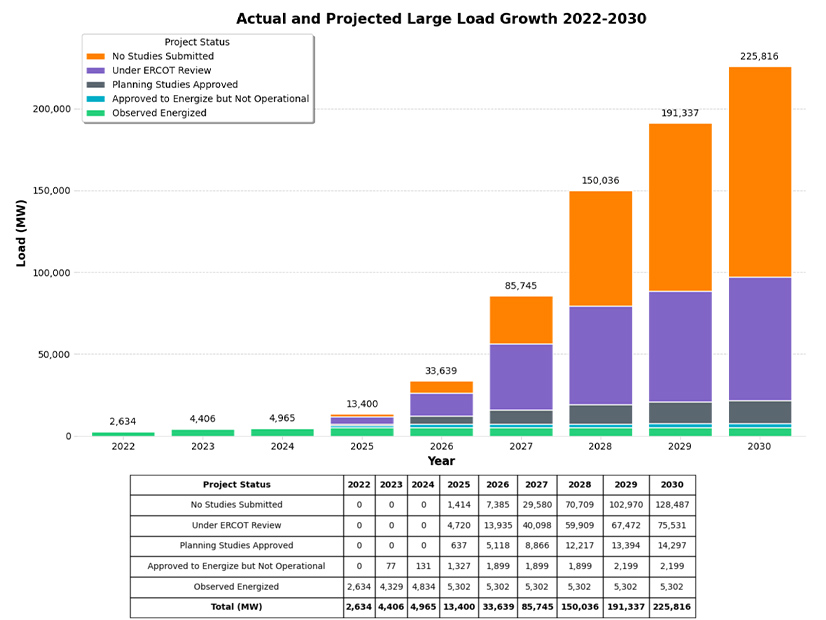

Of course, figuring out the most effective and efficient way to safely interconnect the hundreds of requests from large loads (data centers, bitcoin miners, large industrial facilities and the like) that have flocked to Texas' welcoming arms tops the list. The grid operator began 2025 with 63 GW of interconnection requests in its large-load queue and enters 2026 with more than 233 GW, up 269%. Data centers account for about 77% of that load.

Then there's ERCOT's continuing work on a dispatchable reliability reserve service (DRRS), a product that staff call an ancillary service but that some stakeholders don't. The current proposal is the third iteration of the product, which state law mandated in 2023 and which is a high priority for ERCOT's Board of Directors and the Public Utility Commission (PUC).

A slightly less sexy but equally important initiative is the full-scale analysis of the grid's reliability standard that will take place in 2026. It will be the first formal evaluation of the new reliability standard the PUC established in 2024.

But wait. ERCOT isn’t finished with RTC. Nearly a dozen issues and tweaks have been identified to stabilize the market mechanism, requiring the task force that deployed RTC to stay active.

CEO Pablo Vegas says ERCOT is going through a transition “characterized by high and very rapid growth” of intermittent and short-duration supply resources.

“It’s characterized by a rapidly changing customer base that includes price-responsive loads like crypto miners, rapidly growing large-scale data centers, and continued penetration of distributed energy resources throughout the grid,” he told his board in December. “It’s a significant shift in operational requirements, and it represents an opportunity to create a more resilient and cost-effective grid for the benefit of all Texans.”

Vegas says ERCOT's load growth is "fairly unprecedented" and renders historical interconnection processes obsolete. As of November, the ISO had energized only a little more than 5 GW of large loads in 2025. To remedy that, Vegas and other members of his leadership team proposed a new approach to interconnection called a "batch study" process. (See ERCOT Again Revising Large Load Interconnection Process.)

Projects ready to be studied will be grouped together in batches and allocated existing and planned transmission capacity. ERCOT says this will provide large-load customers with study efficiency, consistency, transparency and certainty. The first group, Batch 0, will establish a foundation and baseline; each subsequent batch will build on the previous one, updating any assumptions that have changed.

Staff will develop the batch study's framework, taking input from market participants and regulators. ERCOT has rolled out a stakeholder engagement plan for January and February that includes six presentations to the PUC and stakeholder groups. It plans to file a proposed study process framework for discussion before the commission's Feb. 20 open meeting.

“There’s clearly a pressure to move quickly and support the economic growth that’s coming our way,” Vegas told the PUC in December.

ERCOT Tries Again with DRRS

There’s also pressure on ERCOT to produce the DRRS product, mandated by House Bill 1500 in 2023. The law requires the grid operator to develop DRRS as an ancillary service and establish minimum requirements for the product.

Lawmakers followed up by directing the PUC to revise ERCOT's original protocol change to establish DRRS as a standalone ancillary service. The new direction limited participation to offline resources, and the change was withdrawn.

ERCOT has now filed a protocol change (NPRR1309) that meets all statutory criteria and improves on the previous change by allowing online resources to participate in DRRS as well. The new design enables the product to be awarded in real time and its procurement to be co-optimized with that of energy and other ancillary services under RTC.

An accompanying protocol change (NPRR1310) adds energy storage resources as DRRS participants and introduces a release factor so the product can support resource adequacy. NPRR1309 has been granted urgent status and is due before the board at its June meeting. NPRR1310 has not been granted the same status.

“We recognize there’s likely to be a lively stakeholder debate,” Keith Collins, vice president of commercial operations, told the board in December. “We are optimistic that it can move through the stakeholder process expeditiously, but we didn’t necessarily want to burden it with a timeline for that.”

ERCOT contracted Aurora Energy Research, which has a large local presence, to study future resource adequacy conditions and the effect of different market designs, including variations of DRRS. The research firm determined that the DRRS design adds dispatchable capacity more cost-effectively than the other designs studied and provides greater resource adequacy benefits across different load and extreme weather conditions. (See ERCOT: New Ancillary Service Key to Resource Adequacy.)

During a December workshop to review the report, stakeholders peppered Aurora staff with questions about the study. As stakeholders questioned whether DRRS is an ancillary service, the firm's representatives said it is meant to achieve a revenue goal, not an operational goal.

Collins said the DRRS mechanism and its eligibility requirements strengthen reliability through ancillary services, whereas ERCOT's operating reserve demand curve, introduced about 10 years ago, uses energy prices to improve reliability.

“In our mind, [DRRS] is using ancillary services to achieve reliability, so it is an ancillary service plus,” he said. “I’m not aware of any other market that has a tool quite like that.”

Energy consultant Eric Goff, who represents the consumer segment, said he doesn't understand how an ancillary service could ever procure 100% of eligible capacity. "It seems like that's a stretch to call it an ancillary service," he said.

The workshop previewed the conversations that will take place over the next few months. ERCOT has scheduled another workshop for the Technical Advisory Committee (TAC) on Jan. 7.

“Obviously, there’ll be more discussion on 1309 and 1310 next month,” Collins said.

Strengthening the Grid

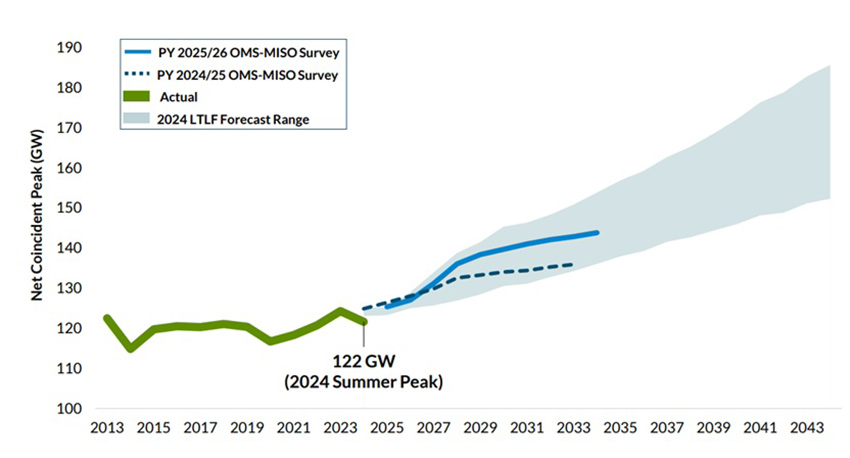

After 2021's devastating Winter Storm Uri and the legislative session that followed, the PUC ordered ERCOT to create a reliability standard to serve as a performance benchmark for meeting consumer demand three years into the future. The standard comprises three criteria to gauge capacity deficiency: frequency (not more than once every 10 years), magnitude (the amount of load lost during a single hour of an outage) and duration (less than 12 hours).

ERCOT and its Independent Market Monitor are required to evaluate the costs and benefits of any market design changes proposed to address deficiencies identified through the assessment process. The first reliability standard assessment will be conducted in 2026 and every three years thereafter; each will include a forward-looking review and analysis of the generation mix.

Vegas said in December that additional supply has been “helpful” in improving the grid’s reliability characteristics.

“In the long term, there is increasing risk if the load materializes and infrastructure development doesn’t keep up,” he told the board.

ERCOT has deployed what it calls its "most significant" design change since its nodal market went live in 2010. The grid operator launched RTC in early December and has since been successfully procuring energy and ancillary services (AS) in real time every five minutes. (See ERCOT Successfully Deploys Real-time Co-optimization.)

“Mission accomplished. It was absolutely brilliant,” ERCOT’s Matt Mereness, who chaired the stakeholder group managing the effort, told the board in December.

The ISO says the new functionality, which also improves how batteries and their state of charge are modeled and considered in RTC, will yield more than $1 billion in annual wholesale market savings.

However, there's still work to be done stabilizing RTC and transitioning to normal processes. Staff and stakeholders have identified nine issues to evaluate further in 2026. Those issues, which range from reviewing the ancillary service demand curve to evaluating concerns about AS deliverability, will be transferred to TAC.

ERCOT has reported five likely protocol violations and associated mitigation plans to the PUC and has filed a protocol change (NPRR1311) to reverse language allowing ancillary service prices to exceed the $5,000/MWh cap during emergency conditions.

Mereness said the plan is to have everything resolved by Jan. 31. The grid operator will spend the first few months of 2026 releasing updates for remaining non-critical defects.

RTC’s successful implementation is another plus for ERCOT and Vegas. He told the board during its year-end meeting that the ISO is determined to be the “most reliable and innovative grid in the world … in the world.” (See “Vegas Sets Lofty Goal,” ERCOT Board Approves $9.4B 765-kV Project.)

"We are one [of the best], if not the leading, grids globally when it comes to operational and technical complexities," Vegas said. "To be successful, we need to be a clear leader on a stage that represents the entirety of this planet."

As part of its strategy to "advance knowledge sharing in grid innovations," ERCOT will host its third annual Innovation Summit on March 31 at a resort near Round Rock, Texas, where "visionaries, thought leaders and innovators" will share ideas to address "challenges and opportunities facing grid operators around the world."

Or those thought leaders could just ask ERCOT staff, who may already be there.